Guidance - Get Control over LLM responses

By Janik Dotzel (@janik___)

Intro

In this post, I will show how Guidance can be used to control the output of a language model. To let Guidance introduce itself, here is the intro from the Guidance Repository:

Guidance enables you to control modern language models more effectively and efficiently than traditional prompting or chaining. Guidance programs allow you to interleave generation, prompting, and logical control into a single continuous flow matching how the language model actually processes the text.

I'll show different features in code, share their output and explain how they work.

(A Jupyter Notebook with the code used in this post can be found here: Notebook)

Features

Guardrail the LLM output structure

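```python
import guidance

# Guidance programs need a default LLM; the chart example below uses this model
guidance.llm = guidance.llms.OpenAI("text-davinci-003")

program = guidance('''
This is a rhyme by {{author}}:
"Roses are red, the water is blue {{gen "rhyme"}}
Another rhyme by {{author}}:
"{{gen "rhyme"}}"
''')

quote = program(author="janik")
quote
```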

This is a rhyme by janik:

"Roses are red, the water is blue

The sky is so high, and so are you

Your beauty is like a summer day

Your smile is like a ray of light that lights my way

Another rhyme by janik:

"The sun is so bright, the stars so high

Your love is like a sweet lullaby

Your eyes sparkle like the morning dew

Your heart is so pure, it's all I need to get me through"

The template mixes static text with placeholders that are filled in at run time. When the program is called, {{author}} is replaced with "janik", and each {{gen "rhyme"}} directive asks the LLM to generate text at exactly that position. The model only fills these gaps; everything else is copied from the template verbatim. This keeps the structure and format of the output predictable and acts as a guardrail on what the LLM produces.
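To make the guardrail idea concrete, here is a toy renderer in plain Python. It is not how Guidance is implemented; it only mimics the two placeholder forms used above, {{name}} and {{gen "name"}}, and takes `generate`, a hypothetical stand-in for the LLM that receives all text rendered so far.

```python
import re
from typing import Callable, Dict, Tuple

# Matches {{gen "name"}} (group 1) or a plain variable {{name}} (group 2).
TOKEN = re.compile(r'\{\{gen "(\w+)"\}\}|\{\{(\w+)\}\}')


def render(template: str, variables: Dict[str, str],
           generate: Callable[[str], str]) -> Tuple[str, Dict[str, str]]:
    """Toy renderer: static text is copied verbatim, variables are substituted,
    and each gen slot is filled by calling generate() on the text so far."""
    parts = []
    captured = {}
    pos = 0
    for match in TOKEN.finditer(template):
        parts.append(template[pos:match.start()])  # static text: the guardrail
        gen_name, var_name = match.group(1), match.group(2)
        if gen_name:
            text = generate("".join(parts))  # the model only sees the prefix
            captured[gen_name] = text
            parts.append(text)
        else:
            parts.append(str(variables[var_name]))
        pos = match.end()
    parts.append(template[pos:])  # trailing static text
    return "".join(parts), captured


prompts = []


def fake_llm(prefix: str) -> str:
    # Hypothetical model: records its prompt and returns a fixed line.
    prompts.append(prefix)
    return "Violets are blue"


text, captured = render('By {{author}}: "{{gen "rhyme"}}"', {"author": "janik"}, fake_llm)
print(text)
```

Whatever the fake model returns, the quotes and the "By janik:" prefix are always present, because they never pass through the model.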

Restrict LLM output selection

```python
options = ['Yes', 'No', 'Maybe']

program = guidance('''Is the following sentence offensive?
Sentence: {{example}}
Answer:{{select "answer" options=options}}''')

executed_program = program(example='your tacos taste can be improved', options=options)
```

Is the following sentence offensive?

Sentence: `your tacos taste can be improved`

Answer: No

Here, Guidance restricts which answers the language model can give. The sentence to classify is passed in through the {{example}} placeholder, and {{select "answer" options=options}} forces the model to answer with exactly one of "Yes", "No", or "Maybe" instead of free-form text. For the example sentence "your tacos taste can be improved", the model selected "No", which is stored in the program's answer variable. Restricting the output this way makes the answer easy to use in code, for example in if conditions, as the next section shows.
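One way to implement such a restriction is to score each allowed option as a continuation of the prompt and keep the best one, so text outside the list can never be returned. The sketch below shows that general technique with a hypothetical `logprob(prompt, option)` callable standing in for the model; it is an assumption for illustration, not a description of Guidance's internals.

```python
import math
from typing import Callable, Dict, Sequence

LogProb = Callable[[str, str], float]  # (prompt, option) -> log-probability


def option_probs(prompt: str, options: Sequence[str], logprob: LogProb) -> Dict[str, float]:
    """Renormalize the model's scores over the allowed options only (softmax)."""
    scores = [logprob(prompt, option) for option in options]
    top = max(scores)
    weights = [math.exp(score - top) for score in scores]  # subtract max for stability
    total = sum(weights)
    return {option: weight / total for option, weight in zip(options, weights)}


def select(prompt: str, options: Sequence[str], logprob: LogProb) -> str:
    """Return the highest-scoring option; the result is always one of `options`."""
    probs = option_probs(prompt, options, logprob)
    return max(probs, key=probs.get)


# Hypothetical scores standing in for a real model.
fake_scores = {"Yes": -2.3, "No": -0.2, "Maybe": -1.6}
prompt = "Is the following sentence offensive?\nSentence: your tacos taste can be improved\nAnswer:"
answer = select(prompt, ["Yes", "No", "Maybe"], lambda p, o: fake_scores[o])
print(answer)
```

Renormalizing over the options is optional for picking the winner, but it gives a usable confidence value per option.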

If conditions & Hidden generation

```python
options = ["yes", "no"]

program = guidance(
'''User: {{query}}
{{#block hidden=True~}}
Is the user query rude or offensive?
Answer: {{select "rude" options=options}}
{{~/block~}}
{{#if rude=="yes"}}Assistant: Please be polite{{~/if~}}
{{#if rude=="no"}}Assistant: {{gen "answer"}}{{~/if~}}
''')

executed_program = program(query='your tacos taste too salty', options=options)
```

User: your tacos taste too salty

Assistant: I'm sorry to hear that. Is there anything else I can do to help?

The program receives the user query, here "your tacos taste too salty". A hidden block asks the model whether the query is rude or offensive and restricts the answer to "yes" or "no" with select. Because the block is marked hidden=True, its prompt and the selected answer do not appear in the final output, but the result is still stored in the rude variable. The two if conditions then branch on that variable: for "yes" the assistant replies with the fixed text "Please be polite", and for "no" the gen directive generates a free-form answer. In this run the model judged the query not rude, so a generated reply was returned. Combining hidden generation with if conditions lets the program make an intermediate decision that shapes the response without exposing that decision to the user.
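The same control flow can be written in plain Python to show what the hidden block and the if conditions do. `classify` and `generate` are hypothetical stand-ins for the select and gen calls; the point is that the classification steers the reply without ever being written to the transcript.

```python
from typing import Callable


def respond(query: str, classify: Callable[[str], str], generate: Callable[[str], str]) -> str:
    # Hidden step: the question and its answer never enter the transcript.
    rude = classify(f"User: {query}\nIs the user query rude or offensive?\nAnswer:")
    if rude not in ("yes", "no"):
        raise ValueError(f"classifier must answer 'yes' or 'no', got {rude!r}")

    transcript = f"User: {query}\n"
    if rude == "yes":
        transcript += "Assistant: Please be polite"  # fixed text, no generation
    else:
        transcript += "Assistant: " + generate(transcript + "Assistant: ")
    return transcript


reply = respond(
    "your tacos taste too salty",
    classify=lambda prompt: "no",
    generate=lambda prompt: "I'm sorry to hear that.",
)
print(reply)
```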

Generate emails with if conditions

```python
priorities = ["low priority", "medium priority", "high priority"]

email = guidance('''
{{#block hidden=True~}}
Here is the customer message we received: {{email}}
Please give it a priority score
priority score: {{select "priority" options=priorities}}
{{~/block~}}
{{#block hidden=True~}}
You are a world class customer support; Your goal is to generate a response based on the customer message;
Here is the customer message to respond: {{email}}
Generate an opening & one paragraph of response to the customer message based on priority score: {{priority}}:
{{gen 'email_response'}}
{{~/block~}}
{{email_response}}
{{#if priority=='high priority'}}Would love to set up a call this/next week, here is the calendly link: https://calendly.com/janik-dotzel{{/if}}

Best regards
Janik
''')

email_response = email(email='What features does webflow have but wix dont have? I need an urgent answer', priorities=priorities)
```

Thanks for reaching out with your question!

Webflow offers a range of features that Wix does not, such as the ability to create custom code, access to a wide range of third-party integrations, and the ability to create dynamic content.

Additionally, Webflow also offers a powerful visual editor, allowing you to create complex designs without needing to write code. I hope this helps answer your question!

Would love to set up a call this/next week, here is the calendly link: https://calendly.com/janik-dotzel

Best regards

Janik

Generating emails with if conditions works the same way. The program takes a customer message as input and runs two hidden blocks. In the first, the model reads the message and assigns it "low priority", "medium priority", or "high priority" using select. In the second, the model is prompted as a world-class customer support agent and uses gen to write an opening and one paragraph of response, given the message and the priority from the first block. Neither prompt appears in the output; only the generated email_response is shown. An if condition then checks whether the priority is "high priority" and, if so, adds an offer to set up a call with a Calendly link. The example message asks for an urgent answer, and the link appears in the output, so the model rated it high priority. The sign-off is static text, so every email ends the same way.
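A plain-Python outline of the same two-step pipeline may help: classify, draft, then assemble the email from fixed pieces. `classify_priority` and `draft_reply` are hypothetical stand-ins for the two hidden blocks; only the priorities, the Calendly URL, and the sign-off come from the program above.

```python
from typing import Callable

PRIORITIES = ("low priority", "medium priority", "high priority")
CALENDLY = "https://calendly.com/janik-dotzel"


def build_reply(message: str,
                classify_priority: Callable[[str], str],
                draft_reply: Callable[[str, str], str]) -> str:
    priority = classify_priority(message)  # hidden step 1
    if priority not in PRIORITIES:
        raise ValueError(f"unexpected priority: {priority!r}")
    body = draft_reply(message, priority)  # hidden step 2
    parts = [body]
    if priority == "high priority":  # the only conditional part of the email
        parts.append(f"Would love to set up a call this/next week, here is the calendly link: {CALENDLY}")
    parts.append("Best regards\nJanik")  # static sign-off
    return "\n\n".join(parts)


email = build_reply(
    "What features does webflow have but wix dont have? I need an urgent answer",
    # Hypothetical keyword rule in place of the model's select call.
    classify_priority=lambda msg: "high priority" if "urgent" in msg else "low priority",
    draft_reply=lambda msg, priority: "Thanks for reaching out with your question!",
)
print(email)
```

Keeping the call offer and sign-off outside the model means they can never be paraphrased or dropped.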

Generate chart

```python
import urllib.parse

import guidance


def generate_chart(query):

    def parse_chart_link(chart_details):
        # URL-encode the generated chart config so it can be placed in a chart image URL
        encoded_chart_details = urllib.parse.quote(chart_details, safe='')
        output = ""
        return output

    # Few-shot examples: a natural-language request and the chart config expected for it
    examples = [
        {
            'input': "Make a chart of the 5 tallest mountains",
            'output': {"type":"bar","data":{"labels":["Mount Everest","K2","Kangchenjunga","Lhotse","Makalu"], "datasets":[{"label":"Height (m)","data":[8848,8611,8586,8516,8485]}]}}
        },
        {
            'input': "Create a pie chart showing the population of the world by continent",
            'output': {"type":"pie","data":{"labels":["Africa","Asia","Europe","North America","South America","Oceania"], "datasets":[{"label":"Population (millions)","data": [1235.5,4436.6,738.8,571.4,422.5,41.3]}]}}
        }
    ]

    guidance.llm = guidance.llms.OpenAI("text-davinci-003")

    # Prompt and examples stay hidden; only the greeting and the parse_chart_link result are shown
    chart = guidance(
'''
{{#block hidden=True~}}
You are a world class data analyst, You will generate chart output based on a natural language;
{{~#each examples}}
Q:{{this.input}}
A:{{this.output}}
---
{{~/each}}
Q:{{query}}
A:{{gen 'chart' temperature=0 max_tokens=500}}
{{/block~}}
Hello here is the chart you want
{{parse_chart_link chart}}
''')

    # Functions passed as arguments can be called from inside the template
    return chart(query=query, examples=examples, parse_chart_link=parse_chart_link)


player_and_points = {
    'John Doe': 1687,
    'Jane Smith': 1660,
    'Alice Johnson': 1856,
    'Bob Thompson': 1755,
    'David Evans': 1786,
    'Chris Brown': 1824,
    'Linda Davis': 1704,
    'Michael Wilson': 1761,
    'Sarah Miller': 1983,
    'Paul Anderson': 1877,
    'Max Williams': 1682,
    'Emily Taylor': 1922,
    'Daniel Clark': 1781,
    'Olivia Martinez': 1755,
    'Matthew Davis': 2129,
    'Sophia Johnson': 1782,
    'Andrew Thompson': 1948,
    'Emma Wilson': 1661,
    'James Robinson': 1797,
    'Oliver Harris': 1786,
    'Grace Lewis': 1945,
    'William Turner': 1867,
    'Olivia Scott': 1727,
    'Henry Adams': 1669,
    'Ella Wright': 1713,
    'Lucas Mitchell': 1532,
    'Sophie Turner': 1406
}
```

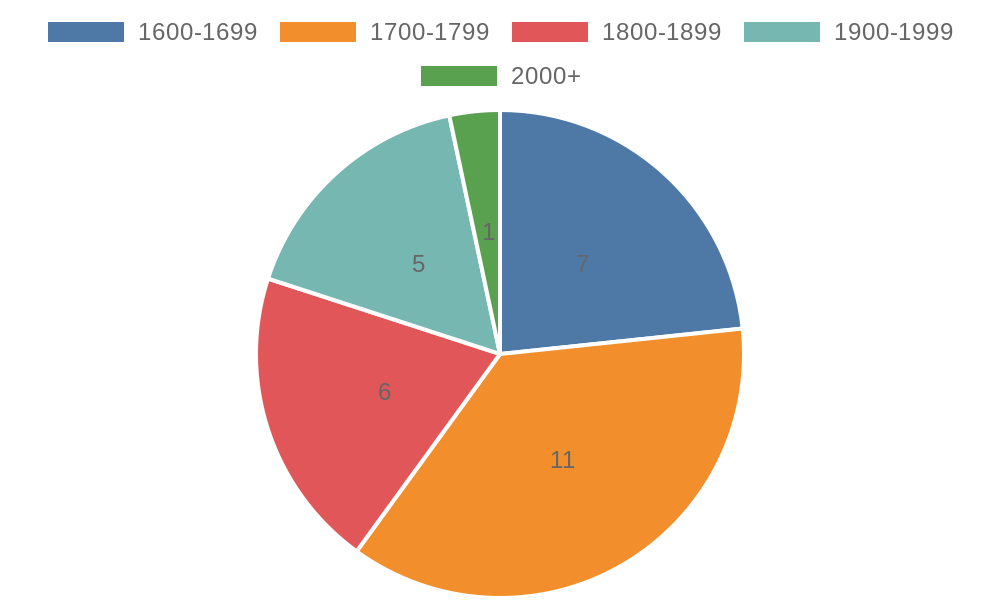

```python
chart = generate_chart('''
Create a pie chart showing how many players are in each score group.
A score group is every 100 points. E.g. 1700-1799 is one score group.
You don't need to show groups that have no players.
Make sure that players with a score higher than 2000 are in the chart.
Double check that the chart is correct. Correctness is the highest priority.
''' + str(player_and_points))

chart
```

Hello here is the chart you want

Chart:

In this code snippet, the generate_chart function turns a natural language request into a chart. It defines a nested helper, parse_chart_link, which URL-encodes the chart details with urllib.parse.quote so they can be embedded in a link to a chart image. Because the helper is passed to the program as the parse_chart_link argument, the template can call it directly with {{parse_chart_link chart}}. The hidden block of the template casts the model as a world-class data analyst and shows it two few-shot examples that pair a request (Q) with a chart configuration (A). The user query comes last, and gen produces the chart configuration with temperature=0 and at most 500 tokens. Only the greeting and the chart link end up in the output, so the user sees a chart rather than the underlying configuration.
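Since the prompt stresses correctness, it can help to compute the expected chart deterministically and compare it with what the model produced. The sketch below groups scores into 100-point buckets, builds a config in the same shape as the few-shot examples, and turns it into a Markdown image link. The post does not say which chart service it uses; as an assumption, the sketch targets QuickChart, which renders a URL-encoded Chart.js config passed in its `c` query parameter.

```python
import json
import urllib.parse
from collections import Counter
from typing import Dict

# Assumption: QuickChart renders a Chart.js config passed URL-encoded in `c`.
CHART_ENDPOINT = "https://quickchart.io/chart?c="


def score_groups(scores: Dict[str, int], width: int = 100) -> Dict[str, int]:
    """Count players per score group (e.g. 1700-1799), skipping empty groups."""
    counts = Counter((score // width) * width for score in scores.values())
    return {f"{low}-{low + width - 1}": counts[low] for low in sorted(counts)}


def pie_chart_config(groups: Dict[str, int]) -> dict:
    """Chart.js-style config in the same shape as the few-shot examples."""
    return {
        "type": "pie",
        "data": {
            "labels": list(groups),
            "datasets": [{"label": "Players", "data": list(groups.values())}],
        },
    }


def chart_markdown(config: dict, endpoint: str = CHART_ENDPOINT) -> str:
    """Encode the config as JSON into the URL and wrap it in a Markdown image."""
    encoded = urllib.parse.quote(json.dumps(config), safe="")
    return f"![Chart]({endpoint}{encoded})"


sample = {"Matthew Davis": 2129, "Bob Thompson": 1755, "Linda Davis": 1704, "John Doe": 1687}
groups = score_groups(sample)
print(groups)  # {'1600-1699': 1, '1700-1799': 2, '2100-2199': 1}
print(chart_markdown(pie_chart_config(groups)))
```

Applied to player_and_points, score_groups gives 1 player in 1400-1499, 1 in 1500-1599, 5 in 1600-1699, 11 in 1700-1799, 4 in 1800-1899, 4 in 1900-1999 and 1 in 2100-2199, which is a quick way to check the model's pie chart.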

Embed text to image

```python
def generate_story(story_idea):
    story_program = guidance('''
{{#block hidden=True~}}
You are a world class story teller; Your goal is to generate a short tiny story less than 200 words based on a story idea;
Here is the story idea: {{story_idea}}
Story: {{gen 'story' temperature=0}}
{{/block~}}
{{#block hidden=True~}}
You are a world class AI artist who is great at generating text to image prompts for the story;
Your goal is to generate a good text to image prompt and put it in a url that can generate image from the prompt;
Story: You find yourself standing on the deck of a pirate ship in the middle of the ocean. You are checking if there are still people on the ship.
Image url markdown:
Story: {{story}}
Image url markdown: {{gen 'image_url' temperature=0 max_tokens=500}})
{{~/block~}}
Story: {{~story~}}
{{image_url}}
''')

    # Execute the program; printing it shows only the non-hidden text
    story = story_program(story_idea=story_idea)
    print(story)
    return story


generate_story("little boy dreaming")
```

Story:

The little boy lay in his bed, dreaming of a world of adventure. He imagined himself soaring through the sky, soaring higher and higher until he could see the entire world below him. He imagined himself exploring the depths of the ocean, discovering new creatures and uncovering hidden treasures. He imagined himself climbing the highest mountains, conquering the toughest obstacles and reaching the peak of success.

The little boy's dreams were filled with excitement and possibility. He dreamed of a life of exploration and discovery, of pushing himself to the limits and achieving greatness. He dreamed of a life of adventure and of making a difference in the world.

The little boy's dreams were his escape from the mundane reality of his everyday life. He dreamed of a life of freedom and of living life to the fullest. He dreamed of a life of joy and of making a lasting impact on the world.

The little boy's dreams were his own, and he cherished them deeply. He knew that one day, he would make them come true.

Image:

Here, we generate a short story and a matching image from a story idea. The generate_story function runs a program with two hidden blocks. The first casts the model as a world-class storyteller and uses gen to write a story of fewer than 200 words from the idea. The second casts the model as an AI artist: it shows a one-shot example (the pirate-ship story) and then asks the model to turn the generated story into a text-to-image prompt embedded in an image URL, written as Markdown. Both generations use temperature=0, which makes the output nearly deterministic. The visible part of the template only prints the story and the image link, so the chain of prompts stays hidden while the result reads as one piece of content.
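The story example is a two-step prompt chain: the output of the first generation becomes the input of the second. Below is a plain-Python outline of that chain. `write_story` and `write_image_prompt` are hypothetical stand-ins for the two gen calls, and `IMAGE_ENDPOINT` is a made-up placeholder for whatever text-to-image service you use. The prompt is URL-encoded before it goes into the link, the same way parse_chart_link encodes chart details.

```python
import urllib.parse
from typing import Callable

# Hypothetical placeholder; substitute a real text-to-image endpoint.
IMAGE_ENDPOINT = "https://images.example.com/prompt/"


def story_with_image(idea: str,
                     write_story: Callable[[str], str],
                     write_image_prompt: Callable[[str], str]) -> str:
    story = write_story(idea)                 # step 1: idea -> story
    image_prompt = write_image_prompt(story)  # step 2: story -> image prompt
    url = IMAGE_ENDPOINT + urllib.parse.quote(image_prompt, safe="")
    return f"Story: {story}\n\n![Story illustration]({url})"


page = story_with_image(
    "little boy dreaming",
    write_story=lambda idea: f"A story about a {idea}.",
    write_image_prompt=lambda story: "a boy asleep, dreaming of the sky, watercolor",
)
print(page)
```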